Timeline of generative AI

From Alan Turing’s early work to today’s transformer models, generative AI has advanced over more than seven decades. Explore the timeline of generative AI.

Generative AI history timeline

Learning the history of generative AI helps explain its breakthroughs. Generative AI models create high-quality images, text, audio, synthetic data, and more. These models learn the patterns and relationships in a dataset of existing content and use them to create new content. Many foundation models are large language models (LLMs) trained on natural language to predict the next word.
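To make next-word prediction concrete, here is a minimal sketch that counts which word follows which in a tiny text and predicts the most frequent follower. It is a toy bigram model, not how an LLM works internally; the function names `train_bigrams` and `predict_next` and the sample sentence are illustrative assumptions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it in the text."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def predict_next(counts, word):
    """Return the most frequent follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical training text, for illustration only.
model = train_bigrams("the cat sat on the mat the cat ran")
print(predict_next(model, "the"))
```

An LLM replaces these raw counts with a neural network that scores every possible next word given the whole preceding context, but the task it is trained on is the same.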

Why Is Generative AI Important?

In recent years, generative AI has evolved rapidly, changing how machines understand and interact with humans. Unlike earlier systems that only classify or analyze data, generative AI can create content, a major advance in AI. Today, companies can build customized models on top of foundation models and quickly adapt them to many downstream tasks without training a new model for each task.

Generative AI 1940s–1960s

The birth of AI in the mid-20th century laid the groundwork for generative AI, which has attracted wide attention only recently.

1948: Claude Shannon publishes “A Mathematical Theory of Communication,” founding information theory and laying the foundation for data compression and communication, both crucial for AI development

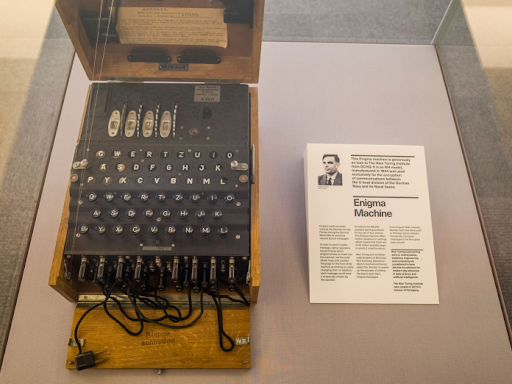

In 1947, Alan Turing introduced the concept of “intelligent machinery” in a paper on whether machines could show intelligent behavior. In 1950, he proposed the Turing Test, in which a human judge evaluates text-based conversations between a human and a machine designed to mimic human responses. If the judge cannot reliably tell the machine from the human, the machine passes.

1960s: ELIZA, an early chatbot, simulates conversation using pattern matching

Early generative AI systems included the ELIZA chatbot, created by German-American computer scientist Joseph Weizenbaum at MIT between 1964 and 1966. One of the first conversational computer programs, ELIZA simulated a psychotherapist and held text-based conversations using basic, pattern-matched responses.
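The pattern-matching idea behind ELIZA can be sketched in a few lines. The rules below are hypothetical examples written for illustration, not Weizenbaum's original script: each rule pairs a regular expression with a reply template that reuses part of the user's message.

```python
import re

# Hypothetical rules for illustration; not the original ELIZA script.
RULES = [
    (re.compile(r"\bi need (.+)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (mother|father)\b", re.IGNORECASE),
     "Tell me more about your {0}."),
]
DEFAULT_REPLY = "Please go on."


def respond(message):
    """Return the reply for the first rule whose pattern matches."""
    for pattern, template in RULES:
        match = pattern.search(message)
        if match:
            # Reuse the captured text, minus trailing punctuation.
            captured = match.group(1).rstrip(".!?")
            return template.format(captured)
    return DEFAULT_REPLY


print(respond("I need a holiday."))
```

The program has no understanding of the conversation. It only reflects the user's own words back, which is why its responses stay basic.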

Generative AI 1980s–2010s

As machine learning algorithms improved, generative AI systems became able to learn from data and get better over time.

RNNs and LSTM networks

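Recurrent neural networks (RNNs), introduced in the late 1980s, and long short-term memory (LSTM) networks, introduced in 1997, improved AI systems’ ability to process sequential data. Because LSTMs can capture order dependence, they helped solve problems such as speech recognition and machine translation.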

2014: Generative adversarial networks (GANs) are introduced as a training approach for neural networks

A GAN is an unsupervised ML algorithm built from two competing neural networks. The generator network creates content, while the discriminator network judges whether that content is real or generated. After many rounds of training, the generator can produce high-resolution images that the discriminator cannot distinguish from real ones.
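The adversarial loop can be sketched with a deliberately tiny example. This is a toy under stated assumptions, not a practical GAN: the "images" are single numbers drawn around 4.0, the generator is a linear function `a * z + b`, the discriminator is a logistic function `sigmoid(w * x + c)`, and the gradients are written out by hand. All names and constants are illustrative.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def gan_step(a, b, w, c, real, z, lr):
    """One discriminator update followed by one generator update."""
    fake = a * z + b  # generator output for noise z
    d_real = sigmoid(w * real + c)
    d_fake = sigmoid(w * fake + c)
    # Discriminator: minimize -[log D(real) + log(1 - D(fake))].
    w -= lr * (-(1.0 - d_real) * real + d_fake * fake)
    c -= lr * (-(1.0 - d_real) + d_fake)
    # Generator: minimize -log D(fake), i.e. try to fool the discriminator.
    d_fake = sigmoid(w * fake + c)
    grad_fake = -(1.0 - d_fake) * w
    a -= lr * grad_fake * z
    b -= lr * grad_fake
    return a, b, w, c


def train_toy_gan(steps=500, lr=0.01, seed=0):
    """Alternate the two updates on samples of real data and noise."""
    rng = random.Random(seed)
    a, b, w, c = 1.0, 0.0, 0.0, 0.0
    for _ in range(steps):
        real = rng.gauss(4.0, 0.5)  # assumed "real" data distribution
        z = rng.gauss(0.0, 1.0)     # random noise fed to the generator
        a, b, w, c = gan_step(a, b, w, c, real, z, lr)
    return a, b, w, c


print(train_toy_gan())
```

Even in this toy, the generator only improves through the discriminator's feedback. Training this simple may oscillate rather than settle, which hints at why real GANs are known to be hard to train.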

Image generation also advanced through other techniques, including variational autoencoders (VAEs), diffusion models, and flow-based models.

2018: StyleGAN introduces improvements for generating high-fidelity and diverse images

Introduced in 2017, transformer models process sequences of natural-language text, as RNNs do. To capture context, transformers model the relationships between the words in a sentence. Because they process all parts of a sequence in parallel rather than one step at a time, transformers are more efficient and powerful than earlier sequence-processing ML models.
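The mechanism transformers use to relate words to one another is called attention. The sketch below shows scaled dot-product self-attention in plain Python, under a simplifying assumption: the word vectors themselves serve as queries, keys, and values, whereas real transformers first apply learned projections. The function names and the sample vectors are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with queries = keys = values."""
    dim = len(vectors[0])
    outputs = []
    # Each position depends only on the inputs, not on earlier outputs,
    # so in practice all positions can be computed in parallel.
    for query in vectors:
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        outputs.append([
            sum(wt * value[i] for wt, value in zip(weights, vectors))
            for i in range(dim)
        ])
    return outputs


# Three hypothetical 2-dimensional word vectors.
print(self_attention([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]))
```

Each output vector is a weighted mix of all input vectors, so every word's representation carries information about the rest of the sentence.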

2018: Generative AI demonstrations impress the public, like Google’s AI Duet composing music with humans

Since 2018, LLMs such as OpenAI’s GPT have been built on the transformer architecture. GPT models use deep neural networks to chat with users, generate text, and perform many other language tasks. They can automate and improve coding, content writing, complex research, and text translation. Their speed and scope make them valuable.

Generative AI 2020s

2020: OpenAI’s GPT-3 raises the bar for LLMs, sparking discussions about its potential and risks

OpenAI released ChatGPT in November 2022, and it reached one million users within five days. Built on OpenAI’s GPT-3.5, ChatGPT lets machines hold coherent, context-aware conversations. ChatGPT can generate text and other content in the user’s preferred style, length, format, and level of detail.

Meta’s Llama reshaped open-source AI development with its foundation language models. Although its models are smaller than GPT-3 and other foundation models, Llama delivers comparable performance with less computing power. At Snapdragon Summit 2023, Qualcomm demonstrated an on-device AI assistant chatting with what it described as the fastest Llama 2 7B running on a phone.

PaLM, Google Gemini

Google announced PaLM in April 2022 and kept it private until March 2023, when it launched an API. PaLM scaled to 540 billion parameters, another NLP breakthrough.

Gemini, the latest Google model, is groundbreaking in performance and capabilities. It works across text, code, audio, image, and video, and it is optimized for three sizes: Ultra, Pro, and Nano.

2022: OpenAI releases ChatGPT, a conversational AI model, to the public, leading to widespread adoption and ethical concerns

According to Google, Gemini underwent thorough safety evaluations to identify and mitigate risks.

The BigScience community of over 1,000 volunteer researchers released BLOOM in July 2022. BLOOM is a multilingual model that generates coherent text in 46 natural languages and 13 programming languages. As a large open-access AI model with 176 billion parameters, BLOOM lets small businesses, individuals, and nonprofits innovate for free.

2023: Generative AI sees widespread adoption across various industries, with ethical debates continuing.

Advanced generative AI models such as DALL-E, Midjourney, and Stable Diffusion generate visual content from text prompts. DALL-E by OpenAI and Midjourney are proprietary models that create realistic images. Stable Diffusion, which is open source, also produces high-quality images. Qualcomm first demonstrated Stable Diffusion running on an Android phone in February 2023.

The impact and future of generative AI

Many industries could use generative AI. Teachers and healthcare professionals could use it to create learning plans and patient rehabilitation training. Graphic and fashion designers could design new logos, styles, and patterns. Personalized digital assistants could book travel, create diet and exercise plans, and pay bills. Developers could code faster. Devices could support chat-like conversations. Generative AI could also support a nearly unlimited range of scientific research and analysis.

Running generative AI on the device itself can reduce the cost and energy consumption of cloud-based AI while improving data privacy and security, latency, performance, and contextual personalization.

Generative AI will advance machine capabilities and shape technology. Generative AI may replace laptops and shift processing from the cloud to the device. Personal AI assistants will make smartphones even more essential. Marketers and creatives will improve efficiency, productivity, and time-to-market. Extended reality will change the world, and consumers will want devices to work across open ecosystems.

Since the 1950s, generative AI has grown in importance, with new advances and demonstrations of its many capabilities now released almost daily. The technology has improved creativity, efficiency, and innovation across industries. Generative AI applications will likely keep advancing and change how AI is used.

Understanding generative AI’s potential applications and impact on various industries requires staying current on its rapid development. Generative AI demonstrates technological advancement and limitless AI potential.

News source: Timeline of Generative AI
